For over a decade, Pinterest stood as a unique visual discovery platform: part scrapbook, part mood board, part shopping engine. But in the past year, that image has deteriorated rapidly, and much of the blame lies at the feet of one person — CEO Bill Ready. Brought…

When LLMs Are Bleeding

LLM Bleed (noun): A phenomenon where a large language model, tasked with managing too many diverse functions or contexts, begins to apply known solutions to new, unrelated problems. Over time, this misapplication becomes internalized by the model as “correct,” despite…

Why AI Images Get Labeled — And Everything Else Gets a Pass

The Hidden Fear Behind AI Labels. What We’re Not Being Told About “Transparency.” There’s a quiet, creeping discomfort spreading across social media, creative forums, and online communities, and it’s not just about AI-generated images. It’s about the…

How Pinterest Lies, Deceives and Shits on Users

Pinterest shut down this account, only to reinstate it within 24 hours. But the damage was done. The suspension obliterated the account’s reach and visibility. Despite repeated assurances that no restrictions remain, the analytics tell a very different story. We can…

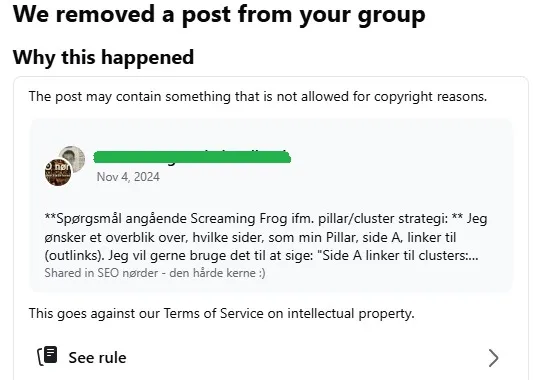

Facebook’s Laughably Incompetent AI Just Flagged an SEO Question as Intellectual Property Theft. Are You Kidding Me?

Let’s get one thing straight: Facebook’s AI moderation is an absolute joke. I run a professional SEO forum. People ask nuanced, technical questions about link-building, WordPress, website speed, web shops, all kinds of optimization, all kinds of questions from all…

Use Your Brain: AI Won’t Replace It; It’ll Upgrade It

By a Former Engineer Turned Therapist. “You don’t fear the machine. You fear what it reveals about yourself.” When I transitioned from a two-decade career in software engineering into the world of psychotherapy, I didn’t expect artificial intelligence to follow me…

ChatGPT’s Hilarious Struggle to Convince Me It’s April 1, 2024: A Glorious Display of AI Confusion

Did Trump send any innocent people out of the country? ChatGPT said: Yes, during Donald Trump’s presidency, his administration enforced strict immigration policies that led to the deportation of many people, including some who were later found to be legally in…

The Hypocrisy of Social Media Moderation: Why Big Tech Loves Playing Judge and Executioner and Ban-Hammering Users

The internet was supposed to be the great equalizer—a space where everyone could express themselves freely. But as social media giants have grown, so has their obsession with control. Platforms like YouTube, Facebook, and Twitter have turned into digital monarchies…

The Irrational Behavior of Artificial Intelligence: Why AI Begins to Fantasize

In 2005, I was part of some early, rough-edged experiments with AI. Our setup was primitive, built around just four parameters, but at the time, it felt revolutionary. Fast forward to today, and AI systems are driven by millions of interconnected processes…

Instagram’s Insanely Stupid AI Can’t Tell the Difference Between a Compliment and Hate Speech

Ban Hammered for Hate Speech: When AI Can’t Tell a Compliment from an Insult. Just to show how little Insta cares about their users: this appeal has been active for almost a year now. Despite a ton of requests asking Insta to correct their mistake, they…